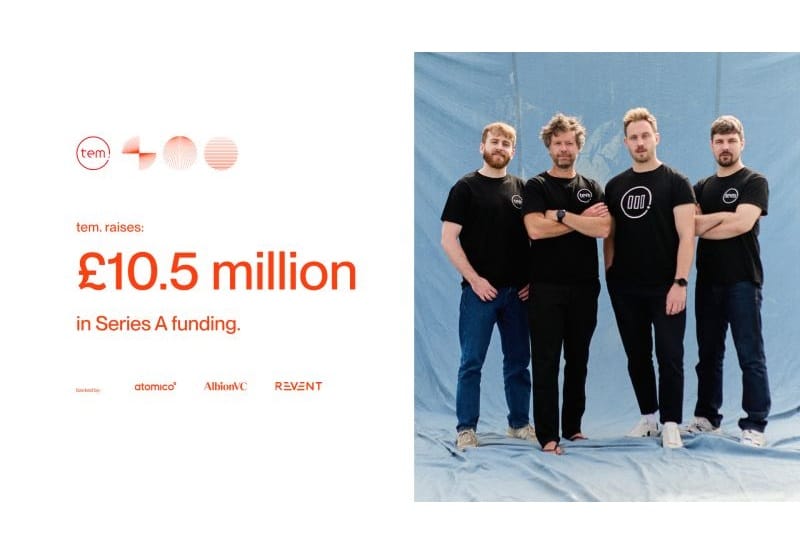

Tem secures $13.7M to disrupt the renewable energy market with AI

London-based startup Tem has raised $13.7 million in Series A funding to expand its AI-powered marketplace, which connects businesses directly with renewable energy sources. The company says this could reduce energy costs by up to 25% and disrupt the traditional utility model.